Attention-Driven Metapath Encoding in Heterogeneous Graphs

Dec 30, 2024

1 min read

Calder Katyal

Abstract

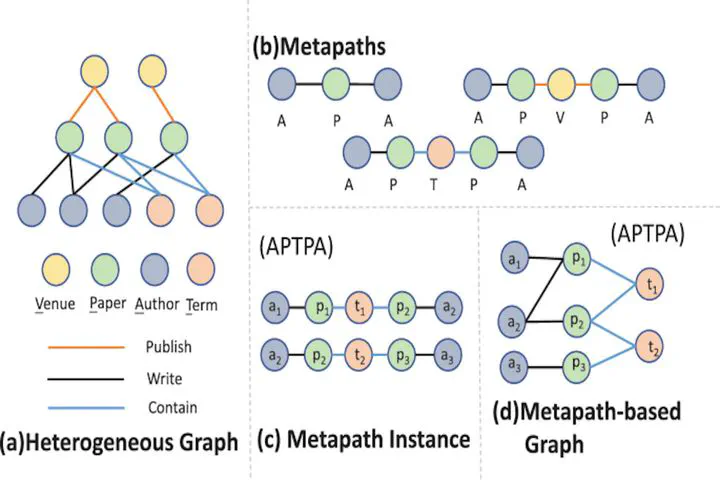

One emerging technique for node classification in heterogeneous graphs is to restrict message aggregation to predefined, semantically meaningful structures called metapaths. This work is the first attempt to incorporate attention into the encoding of entire metapaths without dropping intermediate nodes. Specifically, we construct two encoders: the first uses sequential attention to extend the multi-hop message-passing algorithm of Wang et al. to the metapath setting, and the second applies direct attention to extract semantic relations within the metapath. The model then employs the intra-metapath and inter-metapath aggregation mechanisms of Wang et al. We also adopt the training scheduler for heterogeneous graphs developed by Wong et al., which lets the model gradually learn to classify the most difficult nodes. The result is a resilient, general-purpose framework for capturing semantic structures in heterogeneous graphs. We demonstrate that our model is competitive with state-of-the-art models for node classification on the IMDB dataset, a popular benchmark introduced in Lv et al.
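The abstract describes the architecture only at a high level. Below is a minimal NumPy sketch showing one way the pieces could fit together. It is not the report's implementation. It assumes a "direct attention" encoder that applies scaled dot-product self-attention over every node of a metapath instance, so intermediate nodes are kept rather than dropped. It also assumes mean pooling over instances as a simplified stand-in for intra-metapath aggregation, and semantic attention of the form q^T tanh(W z + b) (as used in HAN) for inter-metapath aggregation. All function names, weight shapes, and the random features are hypothetical. The sequential-attention encoder and the training scheduler are not shown.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def encode_metapath_instance(node_feats, W_q, W_k, W_v):
    # Hypothetical "direct attention" encoder: scaled dot-product
    # self-attention over ALL L nodes of one metapath instance, so
    # intermediate nodes contribute instead of being dropped.
    # node_feats: (L, d), ordered from the target node (row 0) outward.
    Q, K, V = node_feats @ W_q, node_feats @ W_k, node_feats @ W_v
    attn = softmax(Q @ K.T / np.sqrt(K.shape[1]))  # (L, L); each row sums to 1
    return (attn @ V)[0]  # target node's attended vector, shape (d_out,)


def intra_metapath_aggregate(instance_embs):
    # Simplified stand-in (assumption): mean over all instances of one metapath.
    return np.mean(instance_embs, axis=0)


def inter_metapath_aggregate(metapath_embs, W, b, q):
    # Semantic attention across metapaths, scored as q^T tanh(W z + b);
    # returns the fused vector and the per-metapath weights.
    scores = np.tanh(metapath_embs @ W + b) @ q  # (P,)
    beta = softmax(scores)                       # (P,), sums to 1
    return beta @ metapath_embs, beta


# Usage with random features; "MDM"/"MAM" are illustrative IMDB-style metapaths.
rng = np.random.default_rng(0)
d, d_out, d_att = 8, 4, 6
W_q, W_k, W_v = (rng.normal(size=(d, d_out)) for _ in range(3))
W, b, q = rng.normal(size=(d_out, d_att)), np.zeros(d_att), rng.normal(size=d_att)

instances = {
    "MDM": [rng.normal(size=(3, d)) for _ in range(5)],  # movie-director-movie
    "MAM": [rng.normal(size=(3, d)) for _ in range(4)],  # movie-actor-movie
}
Z = np.stack([
    intra_metapath_aggregate([encode_metapath_instance(x, W_q, W_k, W_v) for x in insts])
    for insts in instances.values()
])  # (P, d_out), one row per metapath
h, beta = inter_metapath_aggregate(Z, W, b, q)
print(h.shape, beta.round(3))
```

Reading out the target node at row 0 is one design choice. Pooling over all rows of the attended output would be an alternative that also retains the intermediate nodes' contributions.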

Type

In this paper, I propose and implement a novel heterogeneous graph neural network architecture for CPSC483 at Yale. The model uses an original attention-based mechanism to extract features from semantically meaningful relations (“metapaths”) in a graph. I derive key results and test the model extensively, documenting both in a detailed report.